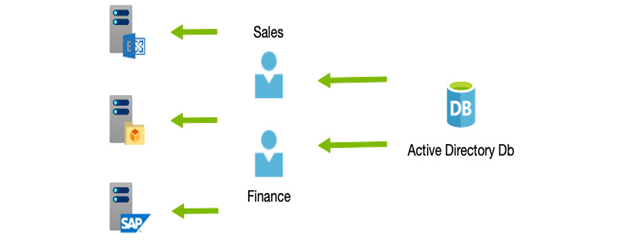

In today’s environments, which often extend beyond an organization’s network into the cloud, controlling access while still enabling users to reach their resources becomes more complicated.

An additional complication is that different users may have different requirements. For example, a system’s administrators need the most secure access policies in place. In contrast, an account that only ever has limited access may not need such stringent measures, because even if it were compromised, it would not be able to reach (or be granted access to) particularly risky systems.

Another consideration is where a user is signing in from. If a user is on the corporate network, physical boundaries are already in place, so you don’t need to be as concerned as you would be about a user signing in from a public network.

You could argue that you should always take the most secure baseline; however, the more security measures you introduce, the more complex a user’s sign-on becomes, which in turn can impact a business.

It is, therefore, imperative that you get the right mix of security for who the user is, what their role is, the sensitivity of what they are accessing, and from where they are signing in.

MFA

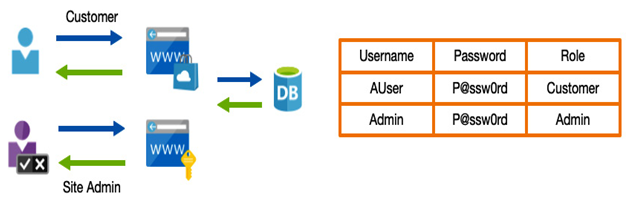

Traditional usernames and passwords can be (relatively) easily compromised. Many solutions have been used to counteract this risk, such as requiring long and complex passwords, forcing periodic changes, and so on.

Another, more secure approach is to require an additional piece of information, generally one that is randomly generated and delivered to a pre-approved device such as a mobile phone. This is known as multi-factor authentication (MFA).

Usually, this additional token is provided to the approved device via a phone call, text message, or mobile app, often called an authentication app.
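To make the idea of an app-generated token more concrete, here is a minimal sketch of the time-based one-time password (TOTP) algorithm from RFC 6238, which builds on HOTP from RFC 4226. Many authenticator apps use this scheme for their rotating codes, but the text above does not say which algorithm a particular provider uses, so treat this as an illustration only. It assumes the device and the service already share a base32-encoded secret (exchanged during registration) and uses the common defaults of HMAC-SHA1, 30-second steps, and 6 digits.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    # The moving factor is encoded as an 8-byte big-endian integer.
    msg = struct.pack(">Q", counter)
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte choose an offset.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    # Keep only the requested number of digits, left-padded with zeros.
    return str(code % (10 ** digits)).zfill(digits)


def totp(secret_b32: str, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238) from a base32 shared secret."""
    secret = base64.b32decode(secret_b32, casefold=True)
    now = time.time() if at is None else at
    # Both sides derive the same counter from the clock, so no code is transmitted.
    return hotp(secret, int(now // step), digits)
```

Because the app and the service compute the code independently from the shared secret and the current time, an attacker who only knows the password cannot produce a valid token without the registered device.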

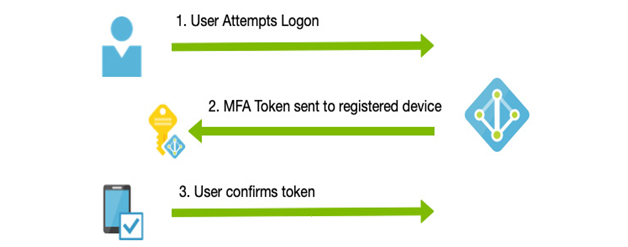

When setting up MFA, a user must register the mobile device with the provider. Initially, a text message will be sent to verify that the user registering the device owns it.

From that point on, authentication tokens can only be received by that device. We can see the process in action in the following diagram:

Figure 3.13 – MFA

Microsoft Azure provides MFA for free; however, the paid tiers of Azure AD offer more granular control.

On the Azure AD Free tier, you can turn on a feature called Security Defaults, which enables MFA for all users. If Security Defaults isn’t enabled, you can still enforce MFA for, at a minimum, all Azure AD Global Administrators. However, on the Free tier, users other than global administrators can only use a mobile authenticator app to provide the token.

With an Azure AD Premium P1 license, you gain more granular control over MFA through Conditional Access (CA), which lets you require MFA only in specific scenarios: for example, when a user signs in over the public internet, but not from a trusted location such as the corporate network.
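The decision a location-based CA policy makes can be sketched as a small function. This is a hypothetical model written for illustration, not the Azure AD policy engine or its API: the `SignIn` and `MfaPolicy` types, the idea of expressing trusted locations as CIDR ranges, and the rule that privileged accounts are always challenged (reflecting the earlier point that administrators need the strictest policies) are all assumptions.

```python
import ipaddress
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class SignIn:
    """Hypothetical sign-in context; real CA policies evaluate many more signals."""
    user: str
    source_ip: str


@dataclass(frozen=True)
class MfaPolicy:
    # Hypothetical trusted locations, e.g. the corporate network's public IP ranges.
    trusted_ranges: Tuple[str, ...]
    # Hypothetical privileged accounts that must always complete MFA.
    privileged_users: FrozenSet[str] = frozenset()


def requires_mfa(sign_in: SignIn, policy: MfaPolicy) -> bool:
    """Return True if this sign-in should be challenged for MFA."""
    # Privileged accounts get the strictest treatment regardless of location.
    if sign_in.user in policy.privileged_users:
        return True
    ip = ipaddress.ip_address(sign_in.source_ip)
    from_trusted = any(
        ip in ipaddress.ip_network(cidr) for cidr in policy.trusted_ranges
    )
    # Everyone else is only challenged when signing in from outside a trusted location.
    return not from_trusted
```

For example, with `trusted_ranges=("203.0.113.0/24",)`, an ordinary user signing in from `203.0.113.10` would not be prompted, the same user signing in from `198.51.100.7` would be, and a listed administrator would be prompted from either address.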

Azure AD Premium P2 extends MFA support by introducing risk-based conditional access. We will explore these concepts shortly, but first we will delve into what is available with Security Defaults.