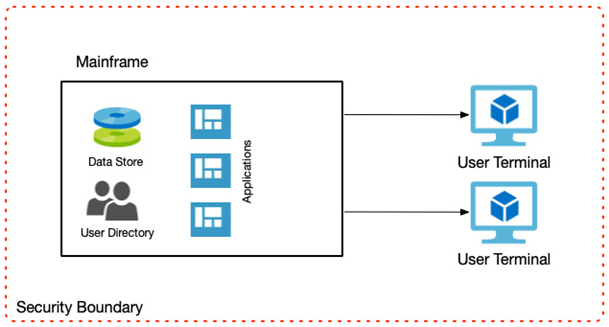

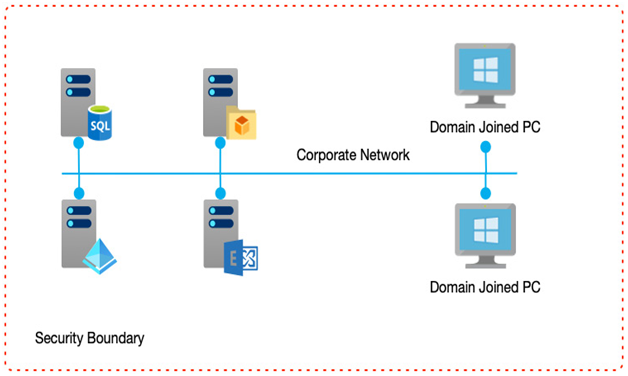

Preventing access to systems from non-authorized networks is an effective way to control access. In an on-premises environment, this is generally the default, but in cloud environments many services are open by default, at least from a networking perspective.

If your application is an internal system, consider controls that would force access along internal routes and block access to external routes.

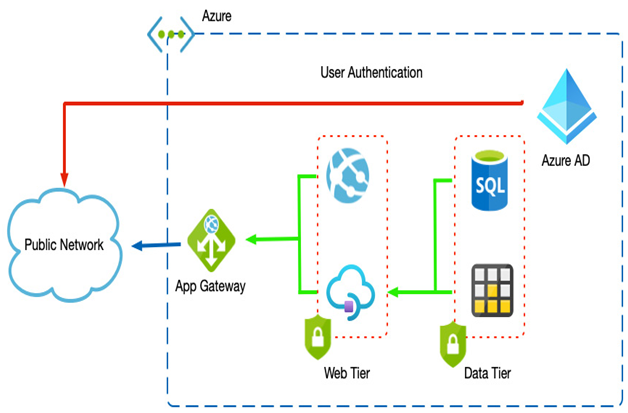

If systems do need to be external-facing, consider network segregation by breaking up the individual components into their own networks or subnets. In this scenario, your solution would have externally facing user interfaces in a public subnet, a middle tier managing business rules in another subnet, and your backend database in a third subnet.

Using firewalls, you would only allow public access to the public subnet. Each of the other subnets would only allow access on specific ports from the adjacent subnet. In this way, the user interface would have no direct access to the databases; it would only be allowed access to the business layer, which then facilitates that access.
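The tiered layout described above can be sketched as a small allow-list model. This is only an illustration of the idea, not a firewall configuration: the subnet names (`public`, `business`, `data`) and the port numbers are assumptions chosen for the example, and anything not explicitly listed is denied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowRule:
    source: str       # subnet name, or "internet" for external traffic
    destination: str  # subnet name
    port: int


# Hypothetical subnets and ports: each tier only accepts traffic
# from the tier directly in front of it.
ALLOW_RULES = {
    AllowRule("internet", "public", 443),    # users reach the UI
    AllowRule("public", "business", 8443),   # UI reaches the business layer
    AllowRule("business", "data", 1433),     # business layer reaches the database
}


def is_allowed(source: str, destination: str, port: int) -> bool:
    """Default deny: traffic passes only if an explicit rule matches."""
    return AllowRule(source, destination, port) in ALLOW_RULES


if __name__ == "__main__":
    print(is_allowed("internet", "public", 443))  # UI is publicly reachable
    print(is_allowed("public", "data", 1433))     # UI cannot reach the database
```

Because the model is default deny, the user interface tier has no path to the database other than through the business layer.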

Azure provides firewall appliances and network security groups (NSGs) that allow or deny traffic between source and destination services; combining the two provides even greater control.
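NSG security rules are processed in priority order, with lower numbers evaluated first, and processing stops at the first match. The sketch below is a simplified model of that behavior and does not call any Azure API: the `Rule` type, the address ranges, and the ports are hypothetical, and only the source address and destination port are considered.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    priority: int        # lower number = evaluated first
    source: str          # source address range in CIDR notation
    port: Optional[int]  # destination port, or None for any port
    allow: bool


def evaluate(rules, source_ip: str, port: int) -> bool:
    """Return the decision of the first matching rule; deny if none match."""
    addr = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        in_range = addr in ipaddress.ip_network(rule.source)
        port_matches = rule.port is None or rule.port == port
        if in_range and port_matches:
            return rule.allow
    return False


# Hypothetical rules for a database subnet: only the business-tier
# subnet (10.0.1.0/24) may connect, and only on port 1433.
DATA_SUBNET_RULES = [
    Rule(priority=100, source="10.0.1.0/24", port=1433, allow=True),
    Rule(priority=4096, source="0.0.0.0/0", port=None, allow=False),
]

if __name__ == "__main__":
    print(evaluate(DATA_SUBNET_RULES, "10.0.1.5", 1433))
    print(evaluate(DATA_SUBNET_RULES, "10.0.2.5", 1433))
```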

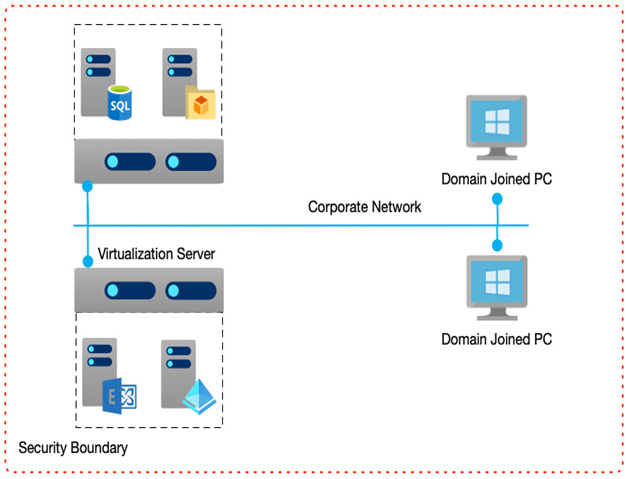

Finally, creating a virtual private network (VPN) from an on-premises network into a cloud environment helps ensure that only corporate users can access your systems, as though they were accessing them on-premises.

Network-level controls help control both perimeter and internal routes, but once a user has access, we need to confirm that the user is who they claim to be.

Identity management

Managing user access is sometimes considered the first line of defense, especially for cloud solutions that need to support mobile workforces.

Therefore, you must have a well-thought-out plan for managing access. Identity management is split into two distinct areas: authentication and authorization.

Authentication is the act of a user proving they are who they say they are. Typically, this would be a username/password combination; however, as discussed in the hacking techniques section, "How do they hack?", these credentials can be compromised.

Therefore, you need to consider options for preventing these types of attacks, as either guessing or capturing a user’s password is a common exploit. You could use additional protections such as Multi-Factor Authentication (MFA) or monitoring for suspicious login attributes, such as the location a user is logging on from.
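One common second factor is a time-based one-time password (TOTP), as generated by authenticator apps. The text does not prescribe a particular MFA method, so the following is only a minimal sketch of the TOTP algorithm from RFC 6238 using the Python standard library. The function names and the shared secret are assumptions for illustration; a production system would rely on a maintained identity service rather than hand-rolled code.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    if at_time is None:
        at_time = time.time()
    counter = int(at_time) // step  # number of time steps since the epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F      # dynamic truncation (RFC 4226)
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, at_time=None) -> bool:
    """Check a submitted code using a constant-time comparison."""
    return hmac.compare_digest(totp(secret, at_time), submitted)


if __name__ == "__main__":
    shared_secret = b"12345678901234567890"  # RFC 6238 test secret, not for real use
    code = totp(shared_secret)
    print(code, verify(shared_secret, code))
```

Even if a password is guessed or captured, an attacker would also need the shared secret held on the user's device to produce a valid code.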

Once a user is authenticated, the act of authorization determines what they can access. Following principles such as least privilege or Just Enough Access (JEA) ensures that users can access only what they require to perform their role. Just-in-Time (JIT) processes provide elevated access only when a user needs it and remove it after a set period.
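The JIT idea of time-limited elevation can be sketched as follows. This is a toy in-memory model under stated assumptions, not how any particular identity product implements it: the `JitAccess` class, the role name, and the user names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    user: str
    role: str
    expires_at: datetime


class JitAccess:
    """Toy in-memory store of time-limited role grants."""

    def __init__(self):
        self._grants = []

    def elevate(self, user: str, role: str, minutes: int, now: datetime) -> Grant:
        """Grant a role that expires automatically after the given period."""
        grant = Grant(user, role, now + timedelta(minutes=minutes))
        self._grants.append(grant)
        return grant

    def has_role(self, user: str, role: str, now: datetime) -> bool:
        """A role applies only while an unexpired grant exists for it."""
        return any(
            g.user == user and g.role == role and now < g.expires_at
            for g in self._grants
        )


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
    access = JitAccess()
    access.elevate("alice", "db-admin", minutes=60, now=start)
    print(access.has_role("alice", "db-admin", start + timedelta(minutes=30)))
    print(access.has_role("alice", "db-admin", start + timedelta(minutes=61)))
```

Users hold no elevated role by default, which reflects least privilege; elevation has to be requested and lapses without anyone having to remember to revoke it.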

Continual monitoring with automated alerting and threat management tools helps ensure that any compromised accounts are flagged and shut down quickly.
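As a rough illustration of automated alerting, the sketch below flags an account when too many failed logins occur within a sliding time window. The class name, threshold, and window size are assumptions for the example; real threat management tools use far richer signals than a simple counter.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flag accounts with too many failed logins inside a sliding window."""

    def __init__(self, threshold: int = 5, window_seconds: int = 300):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # user -> timestamps of failures

    def record_failure(self, user: str, timestamp: float) -> bool:
        """Record a failed login; return True if the account should be flagged."""
        recent = self._failures[user]
        recent.append(timestamp)
        # Discard failures that have fallen out of the window.
        while recent and recent[0] <= timestamp - self.window_seconds:
            recent.popleft()
        return len(recent) >= self.threshold


if __name__ == "__main__":
    monitor = FailedLoginMonitor(threshold=3, window_seconds=60)
    for t in (0, 10, 20):
        flagged = monitor.record_failure("alice", t)
    print(flagged)  # three failures within 60 seconds
```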

Combining authentication and authorization management with good user education about the danger of phishing emails should help prevent the worst attacks. Still, you also need to protect against attacks that bypass the identity layer.