To set up CA, you first need to disable Security Defaults. To do this, follow these steps:

- Navigate to the Azure portal at https://portal.azure.com.

- In the top bar, search for and select Active Directory.

- On the left-hand menu, click Properties.

- At the bottom of the page, click Manage Security Defaults.

- In the side window that appears, set Enable Security defaults to No, and then click Save.
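The same change can be scripted instead of clicked through. As a minimal sketch, assuming you call the Microsoft Graph API with a token that is allowed to update policies (acquiring the token and sending the request are not shown), the following Python only describes the request that turns Security Defaults off. The helper name `build_security_defaults_request` is hypothetical; the endpoint and the `isEnabled` property belong to the Graph `identitySecurityDefaultsEnforcementPolicy` resource.

```python
import json

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_security_defaults_request(enabled: bool) -> dict:
    """Describe (but do not send) the Graph request that sets Security Defaults."""
    return {
        "method": "PATCH",
        "url": f"{GRAPH_BASE}/policies/identitySecurityDefaultsEnforcementPolicy",
        "body": {"isEnabled": enabled},
    }


if __name__ == "__main__":
    # Passing False corresponds to disabling Security Defaults in the portal.
    print(json.dumps(build_security_defaults_request(False), indent=2))
```

Keeping the request as plain data makes it easy to review before you hand it to whichever HTTP client you use.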

The next step is to purchase Azure AD Premium licenses, as follows:

- Navigate to the Azure portal at https://portal.azure.com.

- In the top bar, search for and select Active Directory.

- On the left-hand menu, click Licenses.

- On the main page, click Manage your purchased licenses.

- Click Try / Buy.

- A side window will appear; click Purchase services.

- This will open a new browser window: the Purchase Services page of the Microsoft 365 admin center (admin.microsoft.com).

- Scroll to the bottom of the page and select Security and Identity under Other categories.

- Select Azure Active Directory Premium P2.

- Click Buy.

- Choose whether to pay monthly or pay for a full year and select how many licenses you need. Click Check out now.

- Complete the checkout process.

Once you have completed the checkout process, you need to assign your licenses to your users, as follows:

- Navigate back to the Azure portal at https://portal.azure.com.

- In the top bar, search for and select Active Directory.

- On the left-hand menu, click Licenses.

- On the main page, click Manage your purchased licenses.

- Click All products.

- Click Azure Active Directory Premium P2 (your license count will have increased by the number of licenses you purchased).

- Click Assign.

- Click Users.

- In the side window that appears, select the user(s) you wish to assign licenses to. Click Select.

- Click Assignment options.

- In the side window that appears, set your license options and click OK.

- Click Assign.
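If you have many users, the assignment steps above can also be expressed as a Microsoft Graph `assignLicense` call. The following is a sketch under stated assumptions: the helper name, the user identifier, and the all-zero SKU ID are placeholders for illustration, and you would look up the real `skuId` for your tenant before sending anything. The `disabledPlans` list plays the role of the license options set in the portal.

```python
import json

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_assign_license_request(user_id, sku_id, disabled_plans=None):
    """Describe (but do not send) a Graph assignLicense request for one user."""
    return {
        "method": "POST",
        "url": f"{GRAPH_BASE}/users/{user_id}/assignLicense",
        "body": {
            # Each entry adds one license; disabledPlans switches off
            # individual service plans within that license.
            "addLicenses": [
                {"skuId": sku_id, "disabledPlans": list(disabled_plans or [])}
            ],
            "removeLicenses": [],
        },
    }


if __name__ == "__main__":
    # Both values below are placeholders, not real identifiers.
    request = build_assign_license_request(
        "manager@example.com",
        "00000000-0000-0000-0000-000000000000",
    )
    print(json.dumps(request, indent=2))
```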

With Security Defaults disabled and your premium licenses assigned, you can now configure CA policies, as follows:

- Navigate to the Azure portal at https://portal.azure.com.

- In the top bar, search for and select Azure AD Conditional Access.

- On the left-hand menu, click Named locations.

- Click + New location.

- On this page, you can define specific countries or IP ranges that users can sign in from. Click the back button in your browser to return to the CA page, or click the CA breadcrumb.

- On the left-hand menu, click Policies.

- Click New Policy.

- Enter Managers under Name.

- Click Users and groups.

- Click the Users and groups checkbox, and then select a user. Click OK.

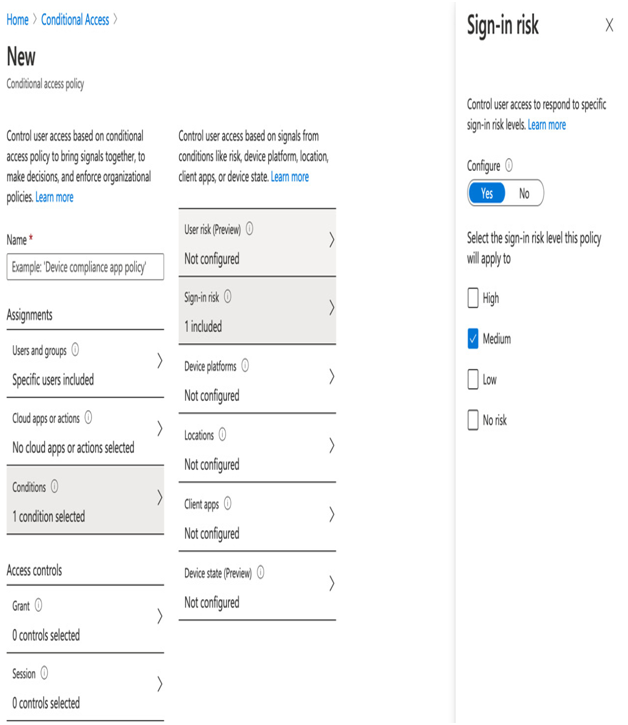

- Click Conditions. You can choose different risk profiles depending on your needs—for example, click Sign-In risk, and then click the Medium checkbox, as in the following example. Click Select:

Figure 3.14 – Assigning risk policies

- Choose the action to perform when the policy is triggered. Click Grant under Access controls, and then click the Require multi-factor authentication checkbox. Click Select.

- Set Enable policy to Report-only.

- Click Create.
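The policy built in these steps can also be written down as data, which is useful if you want to review it or keep it in source control. The sketch below assumes the Microsoft Graph conditional access resources (`/identity/conditionalAccess/namedLocations` and `/identity/conditionalAccess/policies`) and only constructs the JSON bodies; it does not send them. The helper names, the user ID, and the IP range are placeholders. The steps above do not choose cloud apps or client app types, so the `All` and `all` values are assumptions added because the API expects those fields.

```python
import json


def build_named_location(display_name, cidr_ranges, trusted=False):
    """Body for an IP-based named location."""
    return {
        "@odata.type": "#microsoft.graph.ipNamedLocation",
        "displayName": display_name,
        "isTrusted": trusted,
        "ipRanges": [
            {"@odata.type": "#microsoft.graph.iPv4CidrRange", "cidrAddress": cidr}
            for cidr in cidr_ranges
        ],
    }


def build_managers_policy(user_ids):
    """Body for the 'Managers' policy: medium sign-in risk requires MFA."""
    return {
        "displayName": "Managers",
        # Report-only in the portal maps to this state value.
        "state": "enabledForReportingButNotEnforced",
        "conditions": {
            "users": {"includeUsers": list(user_ids)},
            "applications": {"includeApplications": ["All"]},  # assumption
            "clientAppTypes": ["all"],  # assumption
            "signInRiskLevels": ["medium"],
        },
        "grantControls": {"operator": "OR", "builtInControls": ["mfa"]},
    }


if __name__ == "__main__":
    # 203.0.113.0/24 is a documentation-only range used as a placeholder.
    print(json.dumps(build_named_location("Head office", ["203.0.113.0/24"]), indent=2))
    print(json.dumps(build_managers_policy(["00000000-0000-0000-0000-000000000000"]), indent=2))
```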

In this example, we have created a simple access policy that only applies to a single user. The policy will trigger if the user’s activity is deemed medium risk, and will then enforce MFA. However, we set the policy to Report-only, meaning that the actual MFA enforcement won’t take place. This is advised when first creating policies in order to ensure that you do not inadvertently lock users out. In other words, it gives you the ability to test policies before applying them.
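To make the difference between the policy states concrete, here is a toy model of the behavior just described. It is not how Azure AD evaluates policies internally; the `Policy` class, the `evaluate` function, and the outcome strings are all invented for illustration. It only captures the idea that a report-only policy records a match without enforcing the grant control.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    name: str
    user_ids: set
    risk_levels: set
    state: str  # "enabled", "disabled", or "enabledForReportingButNotEnforced"


def evaluate(policy: Policy, user_id: str, sign_in_risk: str) -> str:
    """Return what happens to a sign-in under this simplified model."""
    if policy.state == "disabled":
        return "not_applied"
    if user_id not in policy.user_ids or sign_in_risk not in policy.risk_levels:
        return "not_applied"
    if policy.state == "enabledForReportingButNotEnforced":
        # The match is recorded, but the user is not prompted for MFA.
        return "logged_only"
    return "mfa_required"


if __name__ == "__main__":
    managers = Policy(
        name="Managers",
        user_ids={"manager-1"},
        risk_levels={"medium"},
        state="enabledForReportingButNotEnforced",
    )
    print(evaluate(managers, "manager-1", "medium"))
    managers.state = "enabled"
    print(evaluate(managers, "manager-1", "medium"))
```

Switching `state` from report-only to `enabled` is the only change needed to move from observing the policy to enforcing it, which is why testing in report-only first is low risk.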

In this section, we examined how to provide greater control over the user authentication process for internal users. Sometimes, however, we will want to provide access to external users.