As we have already mentioned, one of the benefits Azure AD provides is self-service password reset, that is, the ability for users to reset their own passwords by completing an online process. This process resets the user's credentials in the cloud. However, in hybrid scenarios where the same accounts also exist in your on-premises AD, you would typically want a password reset performed in the cloud to be written back to that directory.

To achieve this, we use an optional feature in AD Connect called Password Writeback.

Password Writeback is supported in all three hybrid scenarios: PHS, PTA, and federation with Active Directory Federation Services (AD FS).

Password Writeback enforces your on-premises password policies and provides zero-delay feedback: if there is a problem resetting the password in AD, the user is told straight away rather than having to wait for a sync cycle. It doesn't require any firewall rules beyond those already needed for AD Connect, which works over port 443.
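To make the zero-delay feedback point concrete, here is a minimal Python sketch that models a cloud-initiated reset being checked against an on-premises policy, with the outcome returned in the same call rather than after a sync cycle. It is a toy under stated assumptions: `OnPremPolicy`, `OnPremAccount`, `write_back`, and `self_service_reset` are hypothetical names, the policy covers only a minimum length and a password-history check, and the real communication channel between Azure AD and AD Connect is not modeled.

```python
import hashlib
from dataclasses import dataclass, field


def _hash(password: str) -> str:
    # Toy hash for the history check; AD uses its own credential storage.
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


@dataclass
class OnPremPolicy:
    # Hypothetical subset of an on-premises AD password policy.
    min_length: int = 8
    history_size: int = 3


@dataclass
class OnPremAccount:
    username: str
    history: list[str] = field(default_factory=list)  # hashes of past passwords


def write_back(account: OnPremAccount, new_password: str,
               policy: OnPremPolicy) -> tuple[bool, str]:
    """Apply a cloud-initiated reset to the on-premises account (hypothetical).

    The policy is evaluated on-premises and the result is returned
    immediately, instead of surfacing only after a later sync cycle.
    """
    if len(new_password) < policy.min_length:
        return False, f"Password must be at least {policy.min_length} characters."
    recent = account.history[-policy.history_size:] if policy.history_size > 0 else []
    if _hash(new_password) in recent:
        return False, "Password was used recently."
    account.history.append(_hash(new_password))
    return True, "Password reset in AD."


def self_service_reset(account: OnPremAccount, new_password: str,
                       policy: OnPremPolicy) -> bool:
    ok, message = write_back(account, new_password, policy)
    # The user sees the on-premises outcome in the same session.
    print(("Success: " if ok else "Failed: ") + message)
    return ok


policy = OnPremPolicy()
alice = OnPremAccount("alice")
self_service_reset(alice, "short", policy)         # rejected: too short
self_service_reset(alice, "Summer2024!x", policy)  # accepted
self_service_reset(alice, "Summer2024!x", policy)  # rejected: recently used
```

The point of the design is that the policy decision and the message to the user come from the same synchronous call; with a purely sync-based approach, a rejection in AD would only be discovered later.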

Note, however, that this is an optional feature; to use it, the account that AD Connect uses to integrate with your AD must be granted the following access rights:

- Reset password

- Write permissions on lockoutTime

- Write permissions on pwdLastSet
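As a quick illustration of what this list amounts to, the following Python sketch treats the required rights as a set and reports which ones a connector account is still missing. The right labels and the `missing_writeback_rights` helper are hypothetical; in practice these rights are granted as AD permissions on the relevant objects, not verified by a script like this.

```python
# The three rights listed above, as illustrative labels (not the exact
# names shown in the AD security editor).
REQUIRED_WRITEBACK_RIGHTS = frozenset({
    "Reset password",
    "Write lockoutTime",
    "Write pwdLastSet",
})


def missing_writeback_rights(granted: set[str]) -> list[str]:
    """Return the required rights absent from `granted`, in sorted order."""
    return sorted(REQUIRED_WRITEBACK_RIGHTS - set(granted))


# Hypothetical connector account that lacks the lockoutTime permission.
connector_rights = {"Reset password", "Write pwdLastSet"}
print(missing_writeback_rights(connector_rights))
```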

AD Connect ensures that the user sign-in experience is consistent between cloud and hybrid systems. It also offers an additional option: allowing users who are already authenticated on the corporate network to access cloud services without signing in again. This is known as Seamless SSO.

Seamless SSO

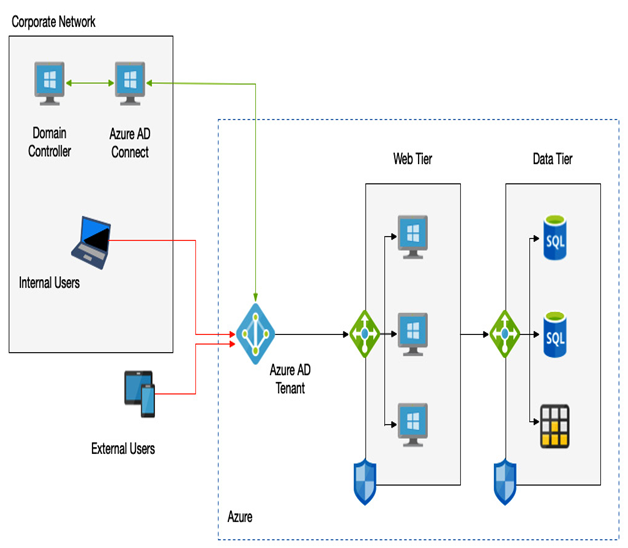

Either PHS or PTA can be combined with an option called Seamless SSO. With Seamless SSO, users who are already authenticated to the corporate network will be automatically signed in to Azure AD when challenged.

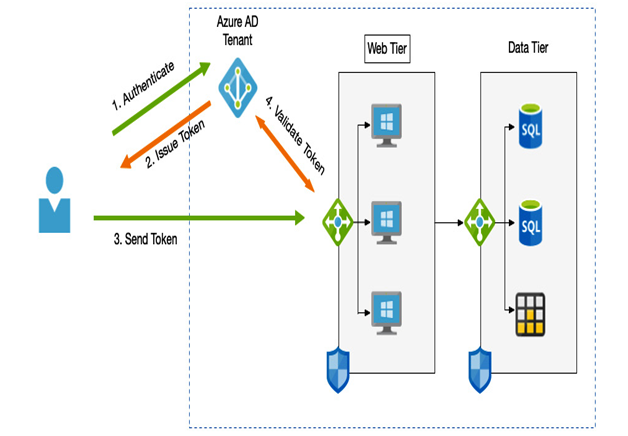

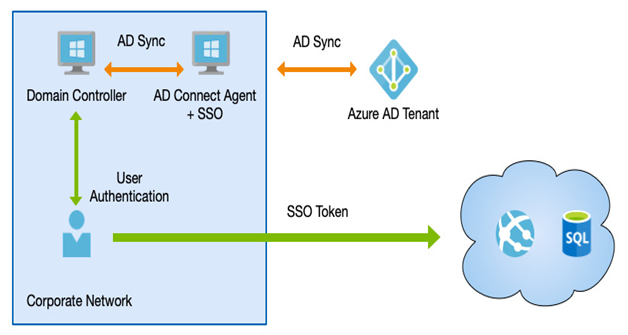

As we can see in the example in the following diagram, if you have a cloud-based application that uses Azure AD for authentication and Seamless SSO is enabled, users who have already signed in to AD won't be prompted again for a username and password:

Figure 3.11 – SSO

However, it's important to note that Seamless SSO applies to user devices that are domain-joined, that is, joined to an on-premises AD domain. Devices that are Azure AD-joined or hybrid Azure AD-joined use a primary refresh token (PRT) to enable SSO instead. This is a slightly different method of achieving the same SSO experience, based on JSON Web Tokens (JWTs).
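To show what "based on JSON Web Tokens" means structurally, here is a minimal Python sketch that decodes a generic JWT into its header and claims. It does not read a real PRT (the device's token broker holds that and keeps its contents opaque to other components); instead it builds a made-up, unsigned token with hypothetical claims and splits it into its three dot-separated, base64url-encoded parts. The helper names are hypothetical, and the signature is deliberately not verified.

```python
import base64
import json


def b64url_encode(data: bytes) -> str:
    # JWT segments use base64url with the '=' padding stripped.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    # Restore the padding that JWTs omit before decoding.
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def decode_jwt_unverified(token: str) -> tuple[dict, dict]:
    """Split a JWT into header and payload claims WITHOUT checking the signature."""
    header_b64, payload_b64, _signature_b64 = token.split(".")
    header = json.loads(b64url_decode(header_b64))
    payload = json.loads(b64url_decode(payload_b64))
    return header, payload


# A made-up, unsigned example token (claims are hypothetical).
sample_header = {"alg": "none", "typ": "JWT"}
sample_claims = {"sub": "alice@contoso.com", "aud": "example-app"}
token = ".".join([
    b64url_encode(json.dumps(sample_header).encode("utf-8")),
    b64url_encode(json.dumps(sample_claims).encode("utf-8")),
    "",  # empty signature segment, as in an unsecured JWT
])

header, claims = decode_jwt_unverified(token)
print(header["typ"], claims["sub"])
```

Real tokens issued by Azure AD are signed, so any code that relies on their claims must validate the signature with a proper JWT library rather than decoding them like this.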

In other words, Seamless SSO is a feature of hybrid scenarios where you are using AD Connect, whereas PRT-based SSO provides single sign-on for devices joined to Azure AD (including hybrid Azure AD-joined devices).
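The distinctions drawn in this section can be summarized as a small decision function. This is a simplified Python sketch, not official selection logic: `JoinType`, `SignInMethod`, and `sso_mechanism` are hypothetical names. It assumes, following the text above, that Azure AD-joined and hybrid Azure AD-joined devices use a PRT, that Seamless SSO applies only to domain-joined devices on the corporate network when PHS or PTA is in use, and that with federation the sign-in is handled by AD FS.

```python
from enum import Enum


class JoinType(Enum):
    DOMAIN_JOINED = "domain-joined"  # joined to on-premises AD only
    HYBRID_AZURE_AD_JOINED = "hybrid Azure AD-joined"
    AZURE_AD_JOINED = "Azure AD-joined"
    NOT_JOINED = "not joined"


class SignInMethod(Enum):
    PHS = "password hash synchronization"
    PTA = "pass-through authentication"
    FEDERATION = "federation with AD FS"


def sso_mechanism(join: JoinType, method: SignInMethod,
                  seamless_sso_enabled: bool, on_corporate_network: bool) -> str:
    """Return the SSO mechanism this section describes (simplified model)."""
    # Azure AD-joined and hybrid Azure AD-joined devices rely on a PRT.
    if join in (JoinType.AZURE_AD_JOINED, JoinType.HYBRID_AZURE_AD_JOINED):
        return "Primary refresh token (PRT)"
    # Seamless SSO is not used with federation; AD FS handles sign-in.
    if method is SignInMethod.FEDERATION:
        return "Sign-in handled by AD FS"
    # Seamless SSO: domain-joined device, on the corporate network, PHS or PTA.
    if join is JoinType.DOMAIN_JOINED and seamless_sso_enabled and on_corporate_network:
        return "Seamless SSO"
    return "Interactive sign-in"


print(sso_mechanism(JoinType.DOMAIN_JOINED, SignInMethod.PTA, True, True))
```

Checking the PRT case first mirrors the text: a hybrid Azure AD-joined device is also domain-joined, but it gets SSO through its PRT rather than through Seamless SSO.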