Federated authentication uses an entirely separate authentication system such as Active Directory Federation Services (AD FS). AD FS has been available for some time to enable enterprises to provide SSO capabilities for users by extending access management to the internet.

Some organizations may already use AD FS, in which case it makes sense to leverage the existing deployment.

AD FS also provides advanced authentication services, such as smartcard-based authentication and third-party MFA.

Generally speaking, it is recommended to use PHS or PTA. You should only consider federated authentication if you already have a specific requirement to use it, such as the need to use smartcard-based authentication.
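The guidance above can be expressed as a small decision helper. This is only an illustrative sketch: the function name, the requirement labels, and the idea of treating third-party MFA as a federation-only requirement are assumptions made for the example, not part of any Azure tooling.

```python
from typing import Iterable

# Assumption: requirement labels that, for this sketch, only federation satisfies.
FEDERATION_ONLY_REQUIREMENTS = frozenset({"smartcard", "third_party_mfa"})


def recommend_sign_in_method(requirements: Iterable[str]) -> str:
    """Default to PHS or PTA unless a requirement specifically calls for federation."""
    if FEDERATION_ONLY_REQUIREMENTS & set(requirements):
        return "Federated authentication (AD FS)"
    return "PHS or PTA"
```

Calling the helper with an empty list returns the default recommendation, while a list containing `"smartcard"` returns the federated option.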

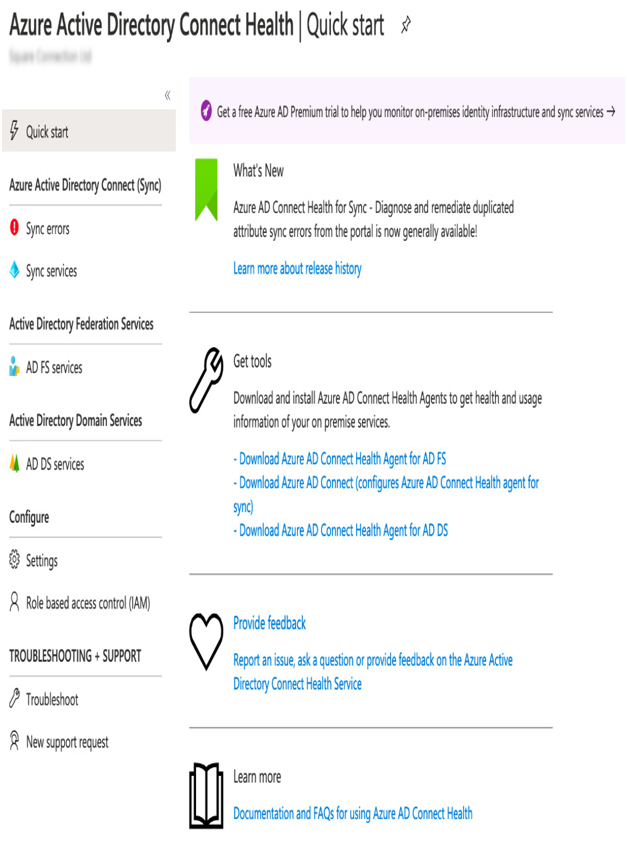

Azure AD Connect Health

Before we leave authentication and, specifically, AD Connect, we must look at one last aspect of the service: AD Connect Health.

Azure AD Connect Health provides different types of health monitoring, as follows:

- AD Connect Health for Sync, which monitors the health of synchronization from your AD DS to Azure AD.

- AD Connect Health for AD DS, which monitors your domain controllers and AD.

- AD Connect Health for AD FS, which monitors AD FS servers.

There are three separate agents, one for each scenario; as with the main AD Connect agent, the download link for the AD Connect Health agent can be accessed in the Azure portal, as follows:

- Navigate to the Azure portal at https://portal.azure.com.

- In the top bar, search for and select Active Directory.

- In the left-hand menu, click AD Connect.

- On the main page, click Azure AD Connect Health under Health and Analytics.

- You will see the links to the AD Connect Health agents under Get tools, as in the following example:

Figure 3.12 – Downloading the AD Connect Health agents

The AD Connect Health blade also gives you a view of alerts, performance statistics, usage analytics, and other information related to your AD environment.

Some of the benefits include the following:

- Reports on these issues:

  - Extranet lockouts

  - Failed sign-ins

  - Privacy compliance

- Alerts on the following:

  - Server configuration and availability

  - Performance

  - Connectivity

Finally, you need to know that to use AD Connect Health, the following must apply:

- You must install an agent on each identity server in your infrastructure that you wish to monitor.

- You must have an Azure AD P1 license.

- You must be a global administrator to install and configure the agent.

- You must have connectivity from your services to Azure AD Connect Health service endpoints (these endpoints can be found on the Microsoft website).
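The connectivity requirement in the last point can be checked before installing an agent. The following is a minimal sketch, assuming outbound HTTPS on port 443. The two hosts in `EXAMPLE_HOSTS` are illustrative only and are not the complete endpoint list, and the function names are hypothetical. Take the authoritative endpoints from the Microsoft documentation.

```python
import socket
from typing import Callable, Dict, Iterable, List

# Assumption: illustrative hosts only, not the full list of required endpoints.
EXAMPLE_HOSTS = ("login.microsoftonline.com", "management.azure.com")


def tcp_connect(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


def check_endpoints(
    hosts: Iterable[str],
    connect: Callable[[str], bool] = tcp_connect,
) -> Dict[str, bool]:
    """Map each host to the result of the connection probe."""
    return {host: connect(host) for host in hosts}


def unreachable(results: Dict[str, bool]) -> List[str]:
    """Return the hosts whose probe failed, sorted by name."""
    return sorted(host for host, ok in results.items() if not ok)
```

Running `unreachable(check_endpoints(EXAMPLE_HOSTS))` on a server you intend to monitor lists any hosts it could not reach. The probe is passed in as a parameter so that it can be swapped out, for example to route through a proxy. A successful TCP connection does not prove that TLS inspection or proxy rules will allow the agent's traffic.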

As we can see, Azure provides a range of options to manage authentication, ensure user details are synchronized between on-premises and the cloud, and enable easier sign-on. We also looked at how we can monitor and ensure the health of the different services we use for integration.

In the next section, we will look at ways to control and manage user authentication that go beyond passwords.