As the software running on servers became more complex and took on more diverse tasks, it became clear that a server that handled internal email during the day but sat unused in the evening and at the weekend was not very efficient.

Conversely, a backup or report-building server might only be used in the evening and not during the day.

One solution to this problem was virtualization, whereby multiple servers, even those running different operating systems, could run on the same physical hardware. The key was that physical resources such as random-access memory (RAM) and compute could be dynamically reassigned among the virtual servers running on that hardware.

So, in the preceding example, the email server would be given more resources during core hours; outside of core hours, its share would be reduced and the freed resources given to the backup and reporting servers.
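To make the idea concrete, the following is a minimal sketch of time-based reallocation rather than how any particular hypervisor works. The `VirtualServer` class, the `allocate_ram` function, the 3:1 weighting, the busy hours, and the 70 GB host are all assumptions chosen for illustration, and weekends are not modelled:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualServer:
    name: str
    busy_hours: frozenset  # hours of the day (0-23) when demand is high


def allocate_ram(servers, hour, total_gb, busy_weight=3, idle_weight=1):
    """Split total_gb across servers in proportion to a per-hour weight."""
    weights = {
        s.name: busy_weight if hour in s.busy_hours else idle_weight
        for s in servers
    }
    total_weight = sum(weights.values())
    return {name: total_gb * w / total_weight for name, w in weights.items()}


# Hypothetical workloads sharing one physical host with 70 GB of RAM.
CORE_HOURS = frozenset(range(8, 18))
EVENING = frozenset(range(18, 24))
servers = [
    VirtualServer("email", CORE_HOURS),
    VirtualServer("backup", EVENING),
    VirtualServer("reporting", EVENING),
]

daytime = allocate_ram(servers, hour=10, total_gb=70)
evening = allocate_ram(servers, hour=22, total_gb=70)
print(daytime)
print(evening)
```

With these assumed weights, the email server receives 42 GB of the 70 GB at 10:00 but only 10 GB at 22:00, when the backup and reporting servers receive 30 GB each. A real virtualization platform would typically react to measured demand rather than a fixed schedule.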

Virtualization also enabled better resilience as the software was no longer tied to hardware. It could move across physical servers in response to an underlying problem such as a power cut or a hardware failure. However, to truly leverage this, the software needed to accommodate it and automatically recover if a move caused a momentary communications failure.

From an architectural perspective, the usual issues remained the same—we still used a single user database directory; virtual servers needed to be able to communicate; and we still had the physically secure boundary of a network.

Virtualization technologies presented different capabilities to design around: centrally shared disks rather than dedicated disks communicating over an internal data bus; faster and more efficient communications between physical servers; and the ability to detect a failing physical server and respond by moving its virtual servers to another physical server that has capacity.
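The last of these capabilities, moving workloads off a failed physical server, can be sketched as a simple placement routine. This is an illustrative assumption and not the algorithm used by any specific product: the host names, the RAM figures, and the `free_ram` and `fail_over` helpers are hypothetical, and only RAM is considered when checking capacity:

```python
def free_ram(host):
    """Return the RAM (in GB) not yet assigned to virtual servers on a host."""
    return host["capacity_gb"] - sum(host["vms"].values())


def fail_over(hosts, failed_name):
    """Move virtual servers from a failed host to surviving hosts with capacity.

    Returns a mapping of virtual server name to its new host, or to None
    when no surviving host has enough free RAM.
    """
    failed = hosts[failed_name]
    survivors = {n: h for n, h in hosts.items() if n != failed_name}
    placements = {}
    # Place the largest virtual servers first so they are not crowded out.
    for vm, ram in sorted(failed["vms"].items(), key=lambda kv: kv[1], reverse=True):
        candidates = [n for n, h in survivors.items() if free_ram(h) >= ram]
        if not candidates:
            placements[vm] = None
            continue
        # Pick the surviving host with the most free RAM.
        target = max(candidates, key=lambda n: free_ram(survivors[n]))
        survivors[target]["vms"][vm] = ram
        placements[vm] = target
    # Anything that could not be placed stays recorded against the failed host.
    failed["vms"] = {vm: ram for vm, ram in failed["vms"].items()
                     if placements[vm] is None}
    return placements


# Hypothetical cluster: three physical hosts and three virtual servers.
hosts = {
    "host-a": {"capacity_gb": 64, "vms": {"email": 32, "files": 16}},
    "host-b": {"capacity_gb": 64, "vms": {"database": 40}},
    "host-c": {"capacity_gb": 32, "vms": {}},
}
placements = fail_over(hosts, "host-a")
print(placements)
```

In this example, the email server can only fit on `host-c`, and the file server then lands on `host-b`. Because the disks are shared centrally, a move like this needs no data copied between hosts, which is part of what makes the approach practical.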

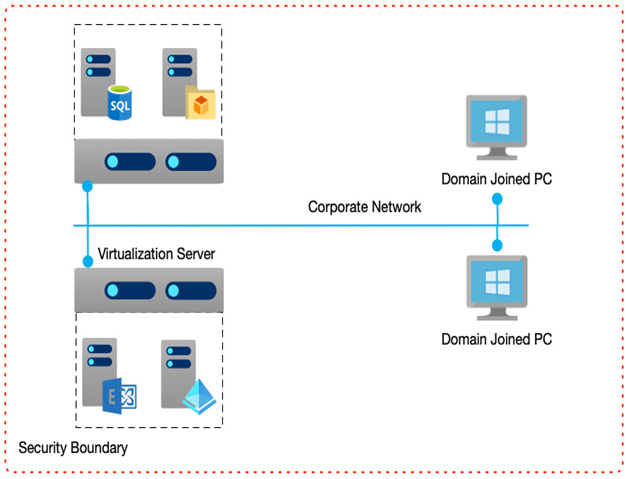

In the following diagram, we see that discrete servers such as databases, file services, and email servers run as separate virtual services, but now they share hardware. However, from a networking point of view, and arguably a software and security point of view, nothing has changed. A large role of the virtualization layer is to abstract away the underlying complexity so that the operating systems and applications they run are entirely unaware:

Figure 1.3 – Virtualization of servers

We will now look at web apps, mobile apps, and application programming interfaces (APIs).